Monday, April 28, 2014

Trophy papers

Posted by Unknown

Getting a paper into certain journals is good for one's career, and such papers usually represent impressive and important work. It seems, however, that many more such manuscripts are produced than high-profile journals, such as Nature, can publish. It's probably not a bad idea to submit a manuscript to a high-profile journal if you think it has a chance there, but these attempts often generate considerable frustration, for reasons ranging from peculiar formatting requirements to rejection without peer review. Some researchers believe in a piece of work so strongly that these frustrations don't deter them, and they keep submitting to one high-profile journal after another. This enthusiasm is admirable, but if repeated attempts fail, the frustration over wasted effort can become considerable. I wonder how others handle this sort of situation. Do you put more work into the project? Do you submit to an open-access journal? Do you move on to the next desirable target journal and take on the significant non-scientific work, such as figure layout and reference formatting, that a manuscript revision can entail? Do you wonder whether the manuscript is fatally flawed because the findings were first written up in a highly concise format? Please share your thoughts and experiences. Should we even be trying to do more than simply sharing our findings?

Sunday, April 13, 2014

Etymology. (Not to be confused with entomology.)

Posted by Lily

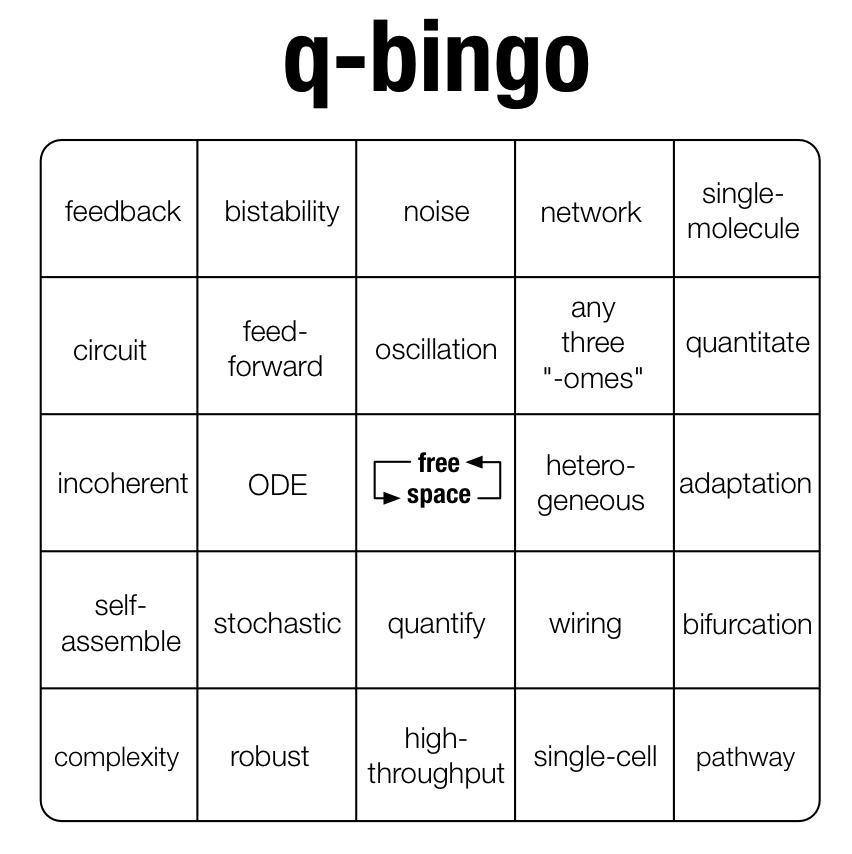

It's time I explained where the name of the blog, "q-bingo", comes from.

It started last year at the q-bio conference, which focuses on ~~quantum~~ ~~quixotic~~ quantitative biology. Like all fields, quantitative biology involves a certain amount of jargon and buzzwords, and certain words crop up more often than they would in everyday conversation.

And where would you hear those words most often? Conferences, of course. In fact, you might start keeping track of how many times certain words come up, and wonder if anyone else is keeping track too...

And thus, q-bingo was born. Simply cover a square whenever you hear its word used in a talk, and when you complete a straight line, shout "q-bingo" straight away. Yes, right there during the talk. [Disclaimer #1: I made this suggestion fully aware that my own talk would be punctuated by a few "bingo"s. Disclaimer #2: There are other examples of such games.] Conference organizers and attendees seemed to love the idea. Sadly, the game never quite got off the ground because it would have meant printing 200+ cards, one for everyone at the conference.
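The rules are simple enough to sketch in code, and generating cards this way would at least take care of the layout if not the printing. This is a hypothetical illustration, not something used at the conference: it assumes square cards and counts rows, columns, and both diagonals as "straight lines" (the post doesn't say whether diagonals count). The names `make_card`, `has_line`, and `play` are made up for this example, and two of the nine words are placeholders rather than words from the post.

```python
import random
from typing import List, Optional, Sequence

# Words mentioned in this post, plus two hypothetical placeholders to fill a 3x3 card.
WORDS = [
    "complexity", "network", "circuit", "incoherent", "feed-forward",
    "single-cell", "heterogeneity",
    "modularity", "robustness",  # placeholders, not from the post
]


def make_card(words: Sequence[str], n: int = 5, seed: Optional[int] = None) -> List[List[str]]:
    """Draw n*n distinct words at random and lay them out row by row."""
    if len(words) < n * n:
        raise ValueError("need at least n*n distinct words")
    rng = random.Random(seed)
    picked = rng.sample(list(words), n * n)
    return [picked[i * n:(i + 1) * n] for i in range(n)]


def has_line(covered: List[List[bool]]) -> bool:
    """True if any full row, full column, or either main diagonal is covered."""
    n = len(covered)
    rows = any(all(row) for row in covered)
    cols = any(all(covered[r][c] for r in range(n)) for c in range(n))
    diag = all(covered[i][i] for i in range(n))
    anti = all(covered[i][n - 1 - i] for i in range(n))
    return rows or cols or diag or anti


def play(card: List[List[str]], heard: Sequence[str]) -> Optional[int]:
    """Cover squares as words are heard; return the index of the word that
    completes a line (time to shout "q-bingo"), or None if no line is completed."""
    n = len(card)
    covered = [[False] * n for _ in range(n)]
    for t, word in enumerate(heard):
        for r in range(n):
            for c in range(n):
                if card[r][c] == word:
                    covered[r][c] = True
        if has_line(covered):
            return t
    return None


card = make_card(WORDS, n=3, seed=1)
for row in card:
    print(" | ".join(row))
# Hearing the three words of the first row, in order, completes a line on the third word.
print(play(card, card[0]))  # 2
```

Checking for a line after every word, rather than once at the end, mirrors the rule of shouting the moment a line is filled.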

On the other hand... At an immunology meeting, I wouldn't necessarily find it noteworthy or funny that people use specialized words like "clonotype" and "Fab fragment". So why did these words jump out at me?

- I think part of the reason is that some of these words are used to create a certain impression rather than to communicate information. For example, the word "complexity" is often used to throw a veil of sophistication over something, without explaining what makes the topic complex. The same goes for "network" and "circuit", to some extent.

- Other words, like "incoherent" (as in an incoherent feed-forward loop, which is a simple pattern of interactions/influences), can mean vastly different things to other scientists and to the general public.

- A few words aren't actually objectionable or amusing: they capture ideas that people are excited about at a particular time. There were several talks about the importance of "single-cell" measurements because of cellular "heterogeneity".
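For readers outside the field, the technical sense of "incoherent" is quite narrow. In a feed-forward loop, X regulates Z directly and also indirectly through an intermediate Y; by the usual definition in the network-motif literature, the loop is incoherent when the sign of the direct path differs from the overall sign of the indirect path. The sketch below checks that sign rule and integrates a toy incoherent loop in which X activates Y and Z while Y represses Z, which gives a pulse of Z rather than a simple rise. The rate equations, the Hill parameters `k` and `h`, and the function names are illustrative assumptions, not taken from any talk.

```python
from typing import List


def ffl_is_coherent(s_xy: int, s_yz: int, s_xz: int) -> bool:
    """Signs are +1 (activation) or -1 (repression).

    A feed-forward loop is coherent when the direct path X->Z has the same
    overall sign as the indirect path X->Y->Z, and incoherent otherwise.
    """
    return s_xz == s_xy * s_yz


def simulate_i1ffl(k: float = 0.1, h: int = 2, dt: float = 1e-3, t_end: float = 10.0) -> List[float]:
    """Forward-Euler integration of a toy incoherent feed-forward loop.

    X is switched on and held at 1; X activates Y and Z, and Y represses Z
    through a Hill function. Unit rate constants, k, and h are illustrative.
    Returns the trajectory of Z, starting from Y = Z = 0.
    """
    x, y, z = 1.0, 0.0, 0.0
    zs = [z]
    for _ in range(int(t_end / dt)):
        dy = x - y                                # Y is produced by X and degraded
        dz = x / (1.0 + (y / k) ** h) - z         # Z is produced by X unless Y represses it
        y += dt * dy
        z += dt * dz
        zs.append(z)
    return zs


print(ffl_is_coherent(+1, -1, +1))  # False: X activates Y and Z, Y represses Z
zs = simulate_i1ffl()
print(f"peak Z = {max(zs):.3f}, final Z = {zs[-1]:.4f}")
```

Z rises quickly while Y is still low, then falls to a much lower steady state once Y accumulates; "incoherent" describes this sign structure, not muddled thinking.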

I want to hear your feedback. Are these just buzzwords, and should we try to use them less? Or are they signs of a young-ish field finding its own language? And of course... if you have ideas for q-bingo words, let me know in the comments because we might need them again this year.

Friday, April 4, 2014

Leave the gun, take the cannoli

Posted by Unknown

In the movie The Godfather, Peter Clemenza says to Rocco Lampone, "Leave the gun, take the cannoli." In this post, I want to argue that modelers should leave the sensitivity analysis and related methods, such as bootstrapping and Bayesian approaches for quantifying the uncertainties of parameter estimates and model predictions [see the nice papers from Eydgahi et al. (2013) and Klinke (2009)], and take the non-obvious testable prediction.

Why should we prefer a non-obvious testable prediction? First, let me say that the methodology mentioned above is valuable. I have nothing against it. I simply want to argue that these analyses are no substitute for a good, non-obvious, testable prediction. Consider the Bayesian methods cited above. They allow a modeler to generate confidence bounds not only on parameter estimates but also on model predictions. That's great. However, these bounds do not guarantee the outcome of an experiment. They are premised on prior knowledge and on the available data, either of which may be incomplete and/or faulty. The same sort of limitation holds for the results of sensitivity analysis, bootstrapping, etc.

I once saw a lecturer at the q-bio Summer School tell his audience that no manuscript about a modeling study should pass peer review without results from a sensitivity analysis. That seems like an extreme point of view to me, and one that risks elevating sensitivity analysis, in the minds of some, to something more than it is: something that validates a model. Models can never be validated. They can only be falsified. (After many failed attempts to prove a model wrong, however, a model may become trusted.) The way to subject a model to falsification, and to make progress in science, is to use it to make an interesting, testable prediction.
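A toy example can make the point about bounds concrete. The sketch below is hypothetical and not drawn from the cited papers: it assumes a hidden process y = 1 - exp(-x), "measures" it with small noise only for 0 < x <= 0.5, fits the deliberately too-simple model y = a*x, and uses a percentile bootstrap over data points to put a 95% interval on the model's prediction at x = 3. The function names, noise level, seeds, and number of resamples are illustrative choices.

```python
import math
import random
from typing import List, Sequence, Tuple


def fit_slope(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Least-squares estimate of a in the (deliberately too simple) model y = a * x."""
    return sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)


def bootstrap_ci_of_prediction(
    xs: Sequence[float],
    ys: Sequence[float],
    x_new: float,
    n_boot: int = 2000,
    alpha: float = 0.05,
    seed: int = 0,
) -> Tuple[float, float]:
    """Percentile bootstrap interval for the fitted model's prediction a * x_new.

    Data points (x, y) are resampled with replacement, the model is refit each
    time, and the alpha/2 and 1 - alpha/2 quantiles of the predictions are returned.
    """
    rng = random.Random(seed)
    n = len(xs)
    preds: List[float] = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        a = fit_slope([xs[i] for i in idx], [ys[i] for i in idx])
        preds.append(a * x_new)
    preds.sort()
    lo = preds[int(alpha / 2 * n_boot)]
    hi = preds[int((1 - alpha / 2) * n_boot) - 1]
    return lo, hi


def true_response(x: float) -> float:
    """Hypothetical 'truth': a saturating response the linear model cannot capture."""
    return 1.0 - math.exp(-x)


noise = random.Random(42)
xs = [0.05 * k for k in range(1, 11)]  # experiments so far only probe 0 < x <= 0.5
ys = [true_response(x) + noise.gauss(0.0, 0.01) for x in xs]

lo, hi = bootstrap_ci_of_prediction(xs, ys, x_new=3.0)
observed = true_response(3.0)  # what a new (noise-free) experiment at x = 3 would show
print(f"95% bootstrap interval for the prediction: [{lo:.2f}, {hi:.2f}]")
print(f"new experiment at x = 3: {observed:.2f}")
```

The interval comes out narrow, yet the new experiment lands far outside it, because resampling only reflects the variability of the data in hand under the assumed model. A Bayesian interval computed under the same linear model would likely share this blind spot; only the new experiment exposes the flaw.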
